Three years ago, our AI system made a biased hiring decision that cost us a major client and damaged our reputation. We had no governance framework, no oversight, no accountability. After implementing comprehensive AI governance across 15+ projects, I’ve learned what works. Here’s the complete guide to implementing responsible AI governance frameworks.

Why AI Governance Matters: The Real Cost of Failure

AI governance isn’t theoretical—it’s essential for survival. Here’s what happens without it:

- Legal liability: Biased decisions lead to lawsuits and regulatory fines

- Reputation damage: Public trust erodes when AI systems fail

- Financial loss: Failed deployments cost millions in wasted investment

- Regulatory non-compliance: GDPR, EU AI Act, and CCPA violations carry severe penalties

- Operational risk: Unmonitored systems fail silently until it’s too late

After our hiring system incident, we lost a $2M contract and spent six months rebuilding trust. That’s when I realized: governance isn’t optional—it’s the foundation of responsible AI.

The Complete Governance Framework

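Effective AI governance requires five core components working together: ethics, bias monitoring, documentation, auditing, and compliance. The two sections below implement them in code: a bias monitor, and a model registry that handles documentation, audits, and compliance checks.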

1. Ethics and Bias Monitoring

Continuous monitoring for bias and ethical issues is non-negotiable. Here’s a reference implementation to adapt (alerts are printed here; in production, route them to your monitoring system):

from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, List

import numpy as np


class BiasMonitor:
    def __init__(self, fairness_threshold: float = 0.8):
        self.fairness_threshold = fairness_threshold
        self.monitoring_history = []
        self.alert_threshold = 0.75  # Alert if fairness drops below this

    def check_fairness(self, predictions: List[Dict], protected_attributes: List[str]) -> Dict:
        # Comprehensive fairness check across all protected groups
        results = {
            'overall_fair': True,
            'group_fairness': {},
            'violations': [],
            'timestamp': datetime.now().isoformat()
        }

        for attr in protected_attributes:
            groups = self._group_by_attribute(predictions, attr)
            fairness_score = self._calculate_demographic_parity(groups)

            results['group_fairness'][attr] = {
                'score': fairness_score,
                'fair': fairness_score >= self.fairness_threshold,
                'group_sizes': {g: len(preds) for g, preds in groups.items()}
            }

            if fairness_score < self.fairness_threshold:
                results['overall_fair'] = False
                results['violations'].append({
                    'attribute': attr,
                    'fairness_score': fairness_score,
                    'threshold': self.fairness_threshold,
                    'severity': 'high' if fairness_score < 0.6 else 'medium'
                })

            # Alert if below alert threshold
            if fairness_score < self.alert_threshold:
                self._send_alert(attr, fairness_score)

        # Store in history
        self.monitoring_history.append(results)
        return results

    def _group_by_attribute(self, predictions: List[Dict], attribute: str) -> Dict:
        # Records without the attribute are collected under 'unknown'
        groups = defaultdict(list)
        for pred in predictions:
            attr_value = pred.get(attribute, 'unknown')
            groups[attr_value].append(pred)
        return groups

    def _calculate_demographic_parity(self, groups: Dict) -> float:
        # Calculate 80% rule (four-fifths rule) for demographic parity:
        # lowest group approval rate divided by the highest
        approval_rates = {}
        for group, predictions in groups.items():
            if not predictions:
                continue
            approved = sum(1 for p in predictions if p.get('approved', False))
            approval_rates[group] = approved / len(predictions)

        # With fewer than two groups there is nothing to compare
        if len(approval_rates) < 2:
            return 1.0

        min_rate = min(approval_rates.values())
        max_rate = max(approval_rates.values())

        # Nobody was approved in any group, so the rates are equal
        if max_rate == 0:
            return 1.0

        return min_rate / max_rate

    def _send_alert(self, attribute: str, score: float):
        # Send alert to governance board
        alert = {
            'type': 'bias_alert',
            'attribute': attribute,
            'fairness_score': score,
            'timestamp': datetime.now().isoformat(),
            'action_required': True
        }
        # In production: send `alert` to your monitoring system
        print(f"ALERT: Bias detected in {attribute}: {score:.2f}")

    def get_fairness_trend(self, attribute: str, days: int = 30) -> Dict:
        # Analyze fairness trends over the last `days` days
        # (filter on timestamp; slicing the list would count checks, not days)
        cutoff = datetime.now() - timedelta(days=days)
        recent_history = [
            h for h in self.monitoring_history
            if datetime.fromisoformat(h['timestamp']) >= cutoff
            and attribute in h.get('group_fairness', {})
        ]

        if not recent_history:
            return {'trend': 'insufficient_data'}

        scores = [
            h['group_fairness'][attribute]['score']
            for h in recent_history
        ]

        if scores[-1] > scores[0]:
            trend = 'improving'
        elif scores[-1] < scores[0]:
            trend = 'declining'
        else:
            trend = 'stable'

        return {
            'trend': trend,
            'current_score': scores[-1],
            'average_score': float(np.mean(scores)),
            'min_score': min(scores),
            'max_score': max(scores),
            'volatility': float(np.std(scores))
        }


# Usage example
monitor = BiasMonitor(fairness_threshold=0.8)

# Check predictions for bias
predictions = [
    {'approved': True, 'gender': 'male', 'age_group': '30-40'},
    {'approved': False, 'gender': 'female', 'age_group': '30-40'},
    # ... more predictions
]

result = monitor.check_fairness(predictions, ['gender', 'age_group'])

if not result['overall_fair']:
    print("Bias detected! Action required.")
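To make the four-fifths rule concrete, here is a small worked calculation, separate from the monitor class. The group names and applicant counts are made up for illustration; they are not data from our incident.

```python
# Hypothetical screening outcomes per group: (approved, total applicants).
outcomes = {
    'group_a': (60, 100),
    'group_b': (45, 100),
}


def selection_rates(outcomes):
    """Approval rate for each group."""
    return {g: approved / total for g, (approved, total) in outcomes.items()}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
passes = ratio >= 0.8  # four-fifths threshold

print(f"ratio={ratio:.2f}, passes four-fifths rule: {passes}")
# prints: ratio=0.75, passes four-fifths rule: False
```

Group B is approved at 45% against 60% for group A, so the ratio is 0.45 / 0.60 = 0.75. That falls under 0.8, which is why the monitor would record a violation for this attribute.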

2. Model Documentation and Registry

Every model must be documented and registered. Here’s a comprehensive model registry:

from dataclasses import dataclass, asdict
from datetime import datetime
from typing import Dict, List


@dataclass
class ModelDocumentation:
    model_name: str
    version: str
    created_date: str
    owner: str
    description: str
    use_cases: List[str]
    training_data: Dict
    performance_metrics: Dict
    bias_analysis: Dict
    limitations: List[str]
    risk_level: str  # 'low', 'medium', 'high'
    compliance_status: Dict
    dependencies: List[str]


class ModelRegistry:
    def __init__(self):
        self.models = {}
        self.audit_history = []

    def register_model(self, documentation: ModelDocumentation) -> str:
        # Validate required fields
        self._validate_documentation(documentation)

        model_id = f"{documentation.model_name}_v{documentation.version}"

        # Check for duplicates
        if model_id in self.models:
            raise ValueError(f"Model {model_id} already registered")

        # Store model
        self.models[model_id] = {
            **asdict(documentation),
            'registered_date': datetime.now().isoformat(),
            'status': 'active',
            'deployment_count': 0
        }
        return model_id

    def _validate_documentation(self, doc: ModelDocumentation):
        # Comprehensive validation
        required_fields = [
            'model_name', 'version', 'owner', 'description',
            'training_data', 'performance_metrics', 'bias_analysis',
            'limitations', 'risk_level'
        ]
        for field in required_fields:
            if not getattr(doc, field, None):
                raise ValueError(f"Missing required field: {field}")

        # Validate performance metrics
        if doc.performance_metrics.get('accuracy', 0) < 0.5:
            raise ValueError("Model accuracy below acceptable threshold")

        # Validate bias analysis (a score of 0.0 is a valid, if alarming, value)
        if doc.bias_analysis.get('fairness_score') is None:
            raise ValueError("Missing bias analysis")

    def audit_model(self, model_id: str) -> Dict:
        if model_id not in self.models:
            raise ValueError(f"Model {model_id} not found")

        model = self.models[model_id]
        audit_report = {
            'model_id': model_id,
            'audit_date': datetime.now().isoformat(),
            'documentation_completeness': self._check_documentation(model),
            'bias_assessment': self._assess_bias(model),
            'performance_validation': self._validate_performance(model),
            'compliance_check': self._check_compliance(model),
            'risk_assessment': self._assess_risks(model),
            'recommendations': []
        }

        # Generate recommendations
        if audit_report['bias_assessment']['score'] < 0.8:
            audit_report['recommendations'].append({
                'priority': 'high',
                'action': 'Improve bias mitigation measures',
                'details': 'Fairness score below threshold'
            })

        if audit_report['performance_validation']['score'] < 0.7:
            audit_report['recommendations'].append({
                'priority': 'high',
                'action': 'Retrain or update the model',
                'details': 'Model performance below acceptable threshold'
            })

        # Store audit
        self.audit_history.append(audit_report)
        return audit_report

    def _check_documentation(self, model: Dict) -> Dict:
        required_docs = [
            'training_data', 'performance_metrics', 'bias_analysis',
            'limitations', 'use_cases', 'dependencies'
        ]
        missing = [doc for doc in required_docs if not model.get(doc)]
        return {
            'score': (len(required_docs) - len(missing)) / len(required_docs),
            'missing': missing
        }

    def _assess_bias(self, model: Dict) -> Dict:
        bias_analysis = model.get('bias_analysis', {})
        return {
            'score': bias_analysis.get('fairness_score', 0.5),
            'protected_groups': bias_analysis.get('protected_groups', []),
            'mitigation_measures': bias_analysis.get('mitigation_measures', []),
            'last_assessment': bias_analysis.get('assessment_date')
        }

    def _validate_performance(self, model: Dict) -> Dict:
        metrics = model.get('performance_metrics', {})
        return {
            'score': metrics.get('accuracy', 0),
            'metrics': metrics,
            'meets_threshold': metrics.get('accuracy', 0) >= 0.7
        }

    def _check_compliance(self, model: Dict) -> Dict:
        # Check regulatory compliance.
        # Note: the evidence keys checked below (for example
        # 'data_protection_measures') are not ModelDocumentation fields, so
        # these checks fail until the stored record is extended with them.
        compliance_status = {
            'gdpr': self._check_gdpr_compliance(model),
            'eu_ai_act': self._check_eu_ai_act_compliance(model),
            'ccpa': self._check_ccpa_compliance(model)
        }
        all_compliant = all(compliance_status.values())
        return {
            'compliant': all_compliant,
            'details': compliance_status,
            'required_actions': [] if all_compliant else ['Review compliance requirements']
        }

    def _check_gdpr_compliance(self, model: Dict) -> bool:
        # GDPR requires data protection measures and user rights
        has_data_protection = 'data_protection_measures' in model
        has_user_rights = 'user_rights_procedures' in model
        has_data_retention = 'data_retention_policy' in model
        return has_data_protection and has_user_rights and has_data_retention

    def _check_eu_ai_act_compliance(self, model: Dict) -> bool:
        # EU AI Act has different requirements based on risk level.
        # The Act's own tiers are unacceptable, high, limited, and minimal;
        # 'medium' and 'low' here are this registry's internal labels.
        risk_level = model.get('risk_level', 'medium')
        if risk_level == 'high':
            # High-risk systems require conformity assessment
            return 'conformity_assessment' in model and 'quality_management_system' in model
        elif risk_level == 'medium':
            # Medium-risk systems require documentation
            return 'technical_documentation' in model
        else:
            # Low-risk systems have minimal requirements
            return True

    def _check_ccpa_compliance(self, model: Dict) -> bool:
        # CCPA requires data deletion procedures
        return 'data_deletion_procedures' in model and 'privacy_policy' in model

    def _assess_risks(self, model: Dict) -> Dict:
        # Comprehensive risk assessment
        risks = []
        risk_score = 0

        # Risk factors
        if model.get('risk_level') == 'high':
            risks.append({
                'type': 'high_risk_system',
                'severity': 'high',
                'description': 'High-risk AI system requires additional safeguards'
            })
            risk_score += 3

        if not model.get('bias_analysis'):
            risks.append({
                'type': 'missing_bias_analysis',
                'severity': 'high',
                'description': 'Missing bias analysis increases discrimination risk'
            })
            risk_score += 2

        if model.get('performance_metrics', {}).get('accuracy', 0) < 0.7:
            risks.append({
                'type': 'low_accuracy',
                'severity': 'medium',
                'description': 'Low accuracy increases operational risk'
            })
            risk_score += 1

        if not model.get('monitoring_plan'):
            risks.append({
                'type': 'no_monitoring',
                'severity': 'medium',
                'description': 'No monitoring plan increases drift risk'
            })
            risk_score += 1

        return {
            'risk_level': model.get('risk_level', 'medium'),
            'risk_score': risk_score,
            'identified_risks': risks,
            'mitigation_status': model.get('risk_mitigation', {}),
            'recommendation': 'high' if risk_score >= 5 else 'medium' if risk_score >= 3 else 'low'
        }


# Usage
registry = ModelRegistry()

# Register a model
doc = ModelDocumentation(
    model_name='hiring_assistant',
    version='1.0.0',
    created_date='2025-01-15',
    owner='AI Team',
    description='AI assistant for resume screening',
    use_cases=['Resume screening', 'Candidate ranking'],
    training_data={'source': 'Historical hiring data', 'size': '10000 records'},
    performance_metrics={'accuracy': 0.85, 'precision': 0.82, 'recall': 0.88},
    bias_analysis={'fairness_score': 0.92, 'protected_groups': ['gender', 'age']},
    limitations=['May have bias in underrepresented groups', 'Requires human review'],
    risk_level='high',
    compliance_status={'gdpr': True, 'eu_ai_act': True},
    dependencies=['scikit-learn', 'pandas']
)

model_id = registry.register_model(doc)
audit = registry.audit_model(model_id)

Implementing Governance Frameworks

There are several established frameworks you can adopt or adapt:

| Framework | Focus | Best For | Key Requirements |
|---|---|---|---|
| NIST AI RMF | Risk management | Comprehensive risk management | Govern, Map, Measure, Manage |
| EU AI Act | Regulatory compliance | EU market compliance | Risk-based classification, conformity assessment |
| ISO/IEC 23053 | AI lifecycle | Standardized processes | Lifecycle management, quality assurance |
| IEEE Ethically Aligned Design | Ethical principles | Ethical AI development | Human well-being, accountability, transparency |

Governance Board Structure

Establish a cross-functional governance board with clear roles:

- AI Ethics Officer: Leads ethical considerations, bias monitoring, and fairness assessments

- Data Protection Officer: Ensures privacy compliance (GDPR, CCPA), data handling procedures

- Technical Lead: Provides technical oversight, model validation, performance monitoring

- Legal Counsel: Ensures regulatory compliance, reviews contracts and policies

- Business Stakeholder: Represents business interests, use case validation, ROI assessment

- User Representative: Represents end-user interests, usability, accessibility

- Security Officer: Ensures security measures, threat assessment, incident response

Governance Board Responsibilities

- Model Approval: Review and approve all models before deployment

- Policy Development: Create and maintain governance policies and procedures

- Risk Assessment: Evaluate risks for each AI system and deployment

- Incident Response: Handle bias incidents, failures, and security breaches

- Regular Audits: Conduct quarterly model and process audits

- Training: Ensure team members understand governance requirements

- Compliance Monitoring: Monitor regulatory changes and ensure compliance
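The model approval responsibility can be enforced as a sign-off gate. The sketch below is one possible shape, not a prescribed process: the role identifiers mirror the board roles listed earlier, but the mapping from risk level to required sign-offs and the `ApprovalRequest` class are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

# Hypothetical policy: which board roles must sign off at each risk level.
REQUIRED_SIGNOFFS: Dict[str, Set[str]] = {
    'low': {'technical_lead'},
    'medium': {'technical_lead', 'ai_ethics_officer'},
    'high': {'technical_lead', 'ai_ethics_officer',
             'data_protection_officer', 'legal_counsel'},
}


@dataclass
class ApprovalRequest:
    model_id: str
    risk_level: str
    signoffs: Set[str] = field(default_factory=set)

    def sign(self, role: str) -> None:
        """Record that a board role has approved this model."""
        self.signoffs.add(role)

    def missing_signoffs(self) -> Set[str]:
        """Roles that still need to approve before deployment."""
        return REQUIRED_SIGNOFFS[self.risk_level] - self.signoffs

    def is_approved(self) -> bool:
        return not self.missing_signoffs()


request = ApprovalRequest(model_id='hiring_assistant_v1.0.0', risk_level='high')
request.sign('technical_lead')
request.sign('ai_ethics_officer')

print(request.is_approved())               # prints: False
print(sorted(request.missing_signoffs()))  # prints: ['data_protection_officer', 'legal_counsel']
```

Tying required sign-offs to risk level keeps low-risk models moving while forcing the full board to look at high-risk ones.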

Best Practices: Lessons from 15+ Implementations

From implementing governance across multiple projects, here’s what works:

- Start early: Implement governance from day one, not as an afterthought. Retroactive governance is incomplete and costly.

- Establish governance board: Cross-functional team for AI oversight. Meet monthly, review all models quarterly.

- Document everything: Models, data, decisions, and outcomes. Documentation is your defense in audits.

- Monitor continuously: Real-time bias and performance monitoring. Set up alerts for threshold violations.

- Regular audits: Quarterly model and process audits. Annual comprehensive reviews.

- Clear accountability: Define ownership and responsibilities. One person accountable per model.

- Transparency: Explainable AI and clear communication. Users should understand how decisions are made.

- User rights: Right to explanation and appeal. Provide clear procedures for users.

- Incident response: Plan for AI failures and bias incidents. Test your response plan regularly.

- Training: Train team on governance principles. Make it part of onboarding.

- Tooling: Use automated tools for monitoring and auditing. Manual processes don’t scale.

- Culture: Build a culture of responsible AI. Make it part of performance reviews.

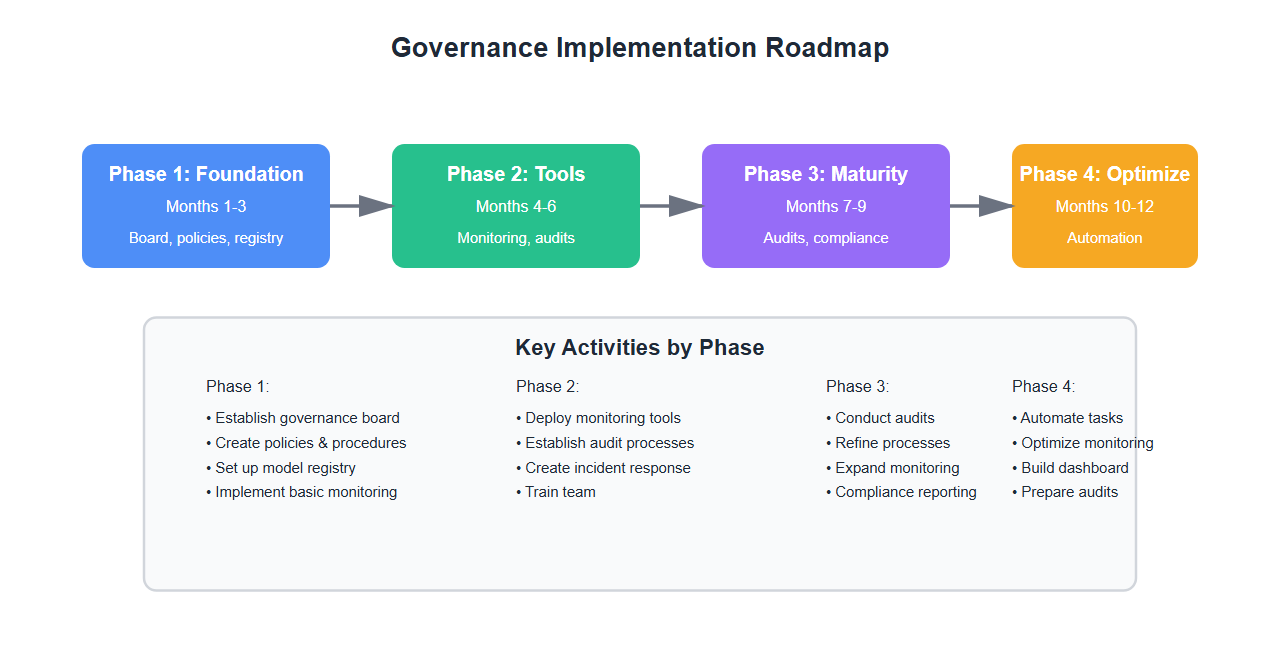

Implementation Roadmap

Here’s a practical 12-month roadmap for implementing governance:

Months 1-3: Foundation

- Establish governance board and define roles

- Create governance policies and procedures

- Set up model registry and documentation standards

- Implement basic bias monitoring

Months 4-6: Tools and Processes

- Deploy automated monitoring tools

- Establish audit processes

- Create incident response procedures

- Train team on governance requirements

Months 7-9: Maturity

- Conduct first comprehensive audits

- Refine processes based on learnings

- Expand monitoring coverage

- Establish compliance reporting

Months 10-12: Optimization

- Automate routine tasks

- Optimize monitoring and alerting

- Build governance dashboard

- Prepare for regulatory audits

Common Mistakes and How to Avoid Them

What I learned the hard way:

- No governance until too late: Implement from day one. Retroactive governance is incomplete and expensive.

- Ignoring bias: Bias monitoring is critical. Don’t assume models are fair—test and verify.

- Poor documentation: Document everything from the start. Retroactive documentation misses critical details.

- No accountability: Clear ownership is essential. Ambiguity leads to failures.

- One-time audits: Continuous monitoring is required. Annual audits aren’t enough.

- Ignoring regulations: Stay updated on GDPR, EU AI Act, and other regulations. Non-compliance is costly.

- No incident response: Plan for failures before they happen. Test your response plan.

- Tooling gaps: Manual processes don’t scale. Invest in automation early.

- Cultural resistance: Governance requires cultural change. Get leadership buy-in.

- Over-engineering: Start simple, iterate. Don’t try to implement everything at once.

Real-World Example: Recovering from a Governance Failure

After our hiring system incident, here’s how we recovered:

- Immediate response: Disabled the system, notified affected parties, launched investigation

- Root cause analysis: Found bias in training data and lack of monitoring

- Remediation: Retrained model with balanced data, implemented bias monitoring

- Governance implementation: Established governance board, created policies, set up monitoring

- Prevention: Implemented pre-deployment reviews, continuous monitoring, regular audits

It took six months and cost $500K, but we now have robust governance that prevents similar incidents.
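Part of a pre-deployment review can be automated as a simple gate. This is a minimal sketch, assuming a model record shaped like the registry entries earlier in this post; the function name, the list of required fields, and the example record are hypothetical, and the thresholds reuse the 0.8 fairness and 0.7 accuracy values from the code above.

```python
from typing import Dict, List

FAIRNESS_THRESHOLD = 0.8  # four-fifths threshold used by the bias monitor
ACCURACY_THRESHOLD = 0.7  # accuracy threshold used in the registry audit
REQUIRED_FIELDS = ['owner', 'training_data', 'bias_analysis', 'limitations']


def pre_deployment_blockers(record: Dict) -> List[str]:
    """Return the reasons a model should not ship; an empty list means go."""
    blockers = []

    # Documentation must be present and non-empty.
    for name in REQUIRED_FIELDS:
        if not record.get(name):
            blockers.append(f"missing {name}")

    # Fairness must have been measured and must clear the threshold.
    fairness = (record.get('bias_analysis') or {}).get('fairness_score')
    if fairness is None or fairness < FAIRNESS_THRESHOLD:
        blockers.append("fairness score missing or below threshold")

    # Accuracy must clear the threshold.
    accuracy = (record.get('performance_metrics') or {}).get('accuracy', 0)
    if accuracy < ACCURACY_THRESHOLD:
        blockers.append("accuracy below threshold")

    return blockers


# Hypothetical candidate model with two problems.
candidate = {
    'owner': 'AI Team',
    'training_data': {'source': 'Historical hiring data'},
    'bias_analysis': {'fairness_score': 0.72},
    'performance_metrics': {'accuracy': 0.85},
    'limitations': [],
}

blockers = pre_deployment_blockers(candidate)
print(blockers)
# prints: ['missing limitations', 'fairness score missing or below threshold']
```

A gate like this does not replace the board's review. It only stops obviously incomplete or unfair models from reaching it.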

🎯 Key Takeaway

AI governance is not optional—it’s essential for responsible AI. Implement comprehensive frameworks covering ethics, bias monitoring, documentation, auditing, and compliance. Establish clear accountability and transparency. With proper governance, you build trust, meet regulatory requirements, and ensure responsible AI deployment that benefits everyone. Start early, monitor continuously, and iterate based on learnings.

Measuring Governance Effectiveness

Key metrics to track:

- Model compliance rate: Percentage of models meeting governance requirements

- Bias incidents: Number of bias-related incidents per quarter

- Audit findings: Number and severity of audit findings

- Documentation completeness: Percentage of required documentation present

- Incident response time: Time to detect and respond to incidents

- Training completion: Percentage of team trained on governance
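Two of these metrics are easy to compute from records you likely already keep. The sketch below assumes a list of per-model audit results and a list of incidents with ISO-format timestamps; the field names and sample data are hypothetical.

```python
from datetime import datetime
from statistics import mean
from typing import Dict, List


def compliance_rate(models: List[Dict]) -> float:
    """Share of models whose latest audit marked them compliant."""
    if not models:
        return 0.0
    return sum(1 for m in models if m['compliant']) / len(models)


def mean_response_hours(incidents: List[Dict]) -> float:
    """Average hours between detecting an incident and resolving it."""
    durations = [
        (datetime.fromisoformat(i['resolved'])
         - datetime.fromisoformat(i['detected'])).total_seconds() / 3600
        for i in incidents
    ]
    return mean(durations) if durations else 0.0


# Hypothetical audit results and incident log.
models = [
    {'model_id': 'a', 'compliant': True},
    {'model_id': 'b', 'compliant': True},
    {'model_id': 'c', 'compliant': False},
    {'model_id': 'd', 'compliant': True},
]
incidents = [
    {'detected': '2025-03-01T09:00:00', 'resolved': '2025-03-01T15:00:00'},
    {'detected': '2025-03-10T08:00:00', 'resolved': '2025-03-10T10:00:00'},
]

print(f"Compliance rate: {compliance_rate(models):.0%}")                # prints: Compliance rate: 75%
print(f"Mean response time: {mean_response_hours(incidents):.1f} h")   # prints: Mean response time: 4.0 h
```

Tracking these per quarter makes it possible to see whether governance is improving, not just whether it exists.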

Bottom Line

AI governance keeps AI systems responsible, ethical, and compliant, and it is how you earn trust and meet regulatory requirements. The cost of governance is far less than the cost of failure.
