Last year, we trained a model on customer data. A researcher showed they could reconstruct customer information from model outputs. After implementing privacy-preserving techniques across 10+ projects, I’ve learned how to protect sensitive data while enabling AI capabilities. Here’s the complete guide to privacy-preserving AI.

Why Privacy-Preserving AI Matters: The Real Threat

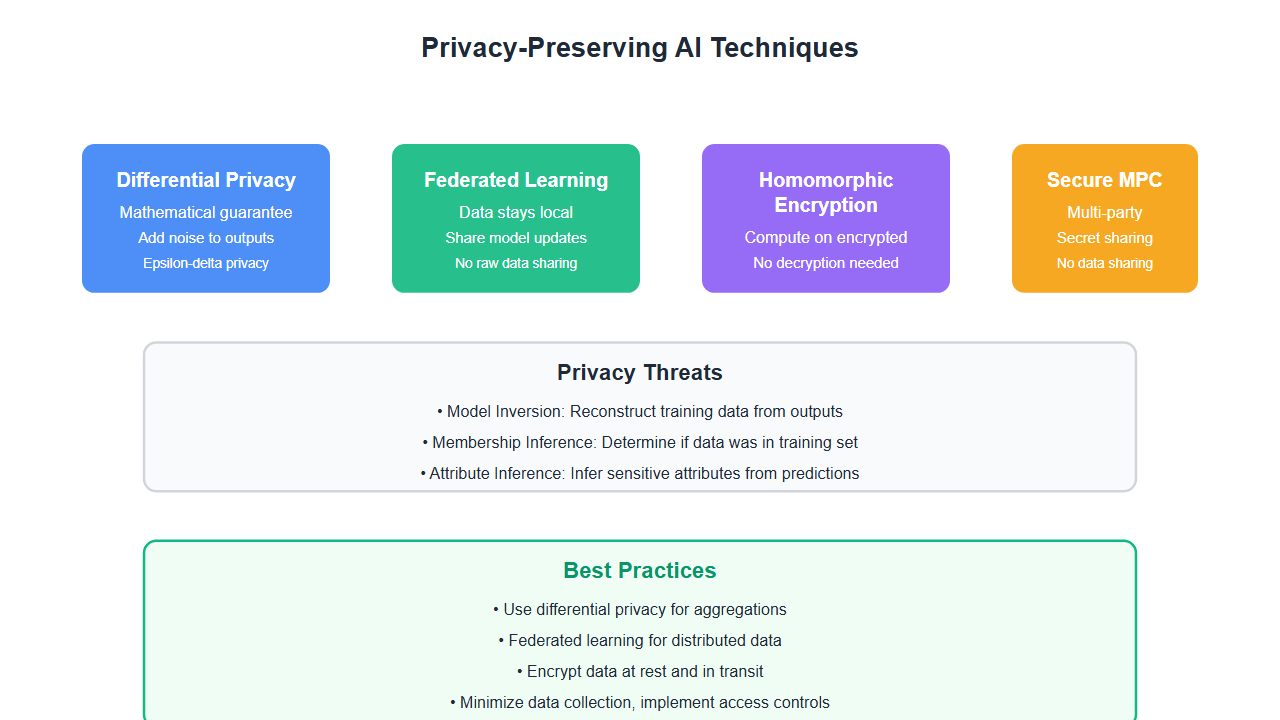

AI systems trained on sensitive data can leak information in multiple ways:

- Model inversion: Reconstructing training data from model outputs

- Membership inference: Determining if specific data was in the training set

- Attribute inference: Inferring sensitive attributes from model predictions

- Model extraction: Extracting model parameters through API queries

- Data reconstruction: Reconstructing original data from embeddings

After our customer data reconstruction incident, we lost a major healthcare client and faced potential HIPAA violations. That’s when I realized: privacy isn’t optional—it’s fundamental to trustworthy AI.

Privacy Threats in AI Systems

Understanding the attack vectors is crucial for defense:

1. Model Inversion Attacks

Attackers can reconstruct training data from model outputs:

from typing import List, Dict

import numpy as np

from sklearn.ensemble import RandomForestClassifier

class ModelInversionDefense:

def __init__(self, epsilon=1.0):

self.epsilon = epsilon # Privacy budget

def add_noise_to_output(self, prediction: float, sensitivity: float = 1.0) -> float:

# Add calibrated noise for differential privacy

scale = sensitivity / self.epsilon

noise = np.random.laplace(0, scale)

return prediction + noise

def clip_output(self, output: float, clip_value: float = 1.0) -> float:

# Clip output to limit information leakage

return np.clip(output, -clip_value, clip_value)

def secure_prediction(self, model, input_data: np.ndarray) -> float:

# Get model prediction

raw_prediction = model.predict_proba(input_data.reshape(1, -1))[0][1]

# Clip to limit information

clipped = self.clip_output(raw_prediction)

# Add noise for differential privacy

noisy = self.add_noise_to_output(clipped)

return noisy

# Usage

defense = ModelInversionDefense(epsilon=1.0)

model = RandomForestClassifier() # Your trained model

secure_pred = defense.secure_prediction(model, input_data)

2. Membership Inference Attacks

Attackers determine if specific data was in the training set:

class MembershipInferenceDefense:

def __init__(self):

self.confidence_threshold = 0.9

def detect_membership_attack(self, predictions: List[Dict]) -> bool:

# High confidence predictions may indicate membership

high_confidence = [p for p in predictions if p.get('confidence', 0) > self.confidence_threshold]

# If too many high-confidence predictions, possible attack

if len(high_confidence) / len(predictions) > 0.5:

return True

return False

def add_uncertainty(self, prediction: Dict) -> Dict:

# Add uncertainty to prevent membership inference

confidence = prediction.get('confidence', 1.0)

# Reduce confidence to add uncertainty

adjusted_confidence = confidence * 0.8

return {

**prediction,

'confidence': adjusted_confidence,

'uncertainty': 1.0 - adjusted_confidence

}

Differential Privacy: The Gold Standard

Differential privacy provides mathematical guarantees about privacy:

import numpy as np

from typing import List, Tuple

from collections import defaultdict

class DifferentialPrivacy:

def __init__(self, epsilon: float = 1.0, delta: float = 1e-5):

self.epsilon = epsilon # Privacy budget

self.delta = delta # Failure probability

self.privacy_budget_used = 0.0

def add_laplace_noise(self, value: float, sensitivity: float = 1.0) -> float:

# Add Laplace noise for (epsilon, 0)-differential privacy

if self.privacy_budget_used >= self.epsilon:

raise ValueError("Privacy budget exhausted")

scale = sensitivity / self.epsilon

noise = np.random.laplace(0, scale)

self.privacy_budget_used += self.epsilon

return value + noise

def add_gaussian_noise(self, value: float, sensitivity: float = 1.0) -> float:

# Add Gaussian noise for (epsilon, delta)-differential privacy

if self.privacy_budget_used >= self.epsilon:

raise ValueError("Privacy budget exhausted")

# Calculate sigma for Gaussian mechanism

sigma = np.sqrt(2 * np.log(1.25 / self.delta)) * sensitivity / self.epsilon

noise = np.random.normal(0, sigma)

self.privacy_budget_used += self.epsilon

return value + noise

def private_aggregate(self, data: List[float], sensitivity: float = 1.0) -> float:

# Private aggregation with noise

result = sum(data) / len(data) if data else 0.0

return self.add_laplace_noise(result, sensitivity)

def private_count(self, data: List[bool]) -> int:

# Private count with noise

count = sum(data)

noisy_count = self.add_laplace_noise(float(count), sensitivity=1.0)

return max(0, int(round(noisy_count))) # Ensure non-negative

def private_histogram(self, data: List[str], bins: List[str]) -> Dict[str, float]:

# Private histogram with noise

counts = defaultdict(int)

for item in data:

if item in bins:

counts[item] += 1

# Add noise to each count

noisy_counts = {}

for bin_name in bins:

count = counts.get(bin_name, 0)

noisy_count = self.add_laplace_noise(float(count), sensitivity=1.0)

noisy_counts[bin_name] = max(0, noisy_count)

return noisy_counts

def compose_queries(self, queries: List[Tuple[float, float]]) -> bool:

# Check if composing queries exceeds privacy budget

total_epsilon = sum(eps for eps, _ in queries)

total_delta = sum(delta for _, delta in queries)

if total_epsilon > self.epsilon or total_delta > self.delta:

return False # Cannot compose

return True

# Usage example

dp = DifferentialPrivacy(epsilon=2.0, delta=1e-5)

# Private aggregation

data = [1.0, 2.0, 3.0, 4.0, 5.0]

private_mean = dp.private_aggregate(data)

print(f"Private mean: {private_mean:.2f}")

# Private count

boolean_data = [True, False, True, True, False]

private_count = dp.private_count(boolean_data)

print(f"Private count: {private_count}")

# Private histogram

categorical_data = ['A', 'B', 'A', 'C', 'B', 'A']

bins = ['A', 'B', 'C']

private_hist = dp.private_histogram(categorical_data, bins)

print(f"Private histogram: {private_hist}")

Federated Learning: Train Without Centralizing Data

Federated learning enables training on distributed data without sharing raw data:

from typing import List, Dict, Any

import numpy as np

from collections import OrderedDict

import torch

import torch.nn as nn

class FederatedLearning:

def __init__(self, model: nn.Module, num_clients: int = 10):

self.global_model = model

self.num_clients = num_clients

self.client_models = [model.__class__() for _ in range(num_clients)]

self.training_history = []

def federated_training_round(self, client_data: List[Dict[str, Any]],

num_rounds: int = 10,

clients_per_round: int = 5,

local_epochs: int = 5) -> Dict:

# Federated averaging algorithm

for round_num in range(num_rounds):

# Select random subset of clients

selected_clients = np.random.choice(

self.num_clients,

size=min(clients_per_round, self.num_clients),

replace=False

)

# Local training on each selected client

client_updates = []

client_sizes = []

for client_id in selected_clients:

# Get client data

client_dataset = client_data[client_id]

# Train local model

local_model = self._train_local_model(

self.client_models[client_id],

client_dataset,

local_epochs

)

# Get model parameters

local_params = list(local_model.parameters())

# Compute update (difference from global model)

update = self._compute_update(local_params, self.global_model.parameters())

client_updates.append(update)

client_sizes.append(len(client_dataset))

# Aggregate updates (weighted average by dataset size)

global_update = self._aggregate_updates(client_updates, client_sizes)

# Apply update to global model

self._apply_update(self.global_model, global_update)

# Update client models with global model

for client_id in selected_clients:

self.client_models[client_id].load_state_dict(self.global_model.state_dict())

# Track training

self.training_history.append({

'round': round_num,

'clients_participated': len(selected_clients),

'total_samples': sum(client_sizes)

})

return {

'final_model': self.global_model,

'training_history': self.training_history

}

def _train_local_model(self, model: nn.Module, dataset: List[Dict], epochs: int) -> nn.Module:

# Train model on local data

optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

criterion = nn.CrossEntropyLoss()

for epoch in range(epochs):

for sample in dataset:

inputs = torch.tensor(sample['features'], dtype=torch.float32)

labels = torch.tensor(sample['label'], dtype=torch.long)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

return model

def _compute_update(self, local_params: List, global_params: List) -> List:

# Compute parameter update (difference)

update = []

for local_param, global_param in zip(local_params, global_params):

update.append(local_param.data - global_param.data)

return update

def _aggregate_updates(self, updates: List[List], sizes: List[int]) -> List:

# Weighted average of updates

total_size = sum(sizes)

aggregated = []

for i in range(len(updates[0])):

weighted_sum = torch.zeros_like(updates[0][i])

for update, size in zip(updates, sizes):

weighted_sum += update[i] * size

aggregated.append(weighted_sum / total_size)

return aggregated

def _apply_update(self, model: nn.Module, update: List):

# Apply aggregated update to global model

for param, update_val in zip(model.parameters(), update):

param.data += update_val

# Usage

class SimpleNN(nn.Module):

def __init__(self):

super().__init__()

self.fc1 = nn.Linear(10, 5)

self.fc2 = nn.Linear(5, 2)

def forward(self, x):

x = torch.relu(self.fc1(x))

x = self.fc2(x)

return x

model = SimpleNN()

fl = FederatedLearning(model, num_clients=10)

# Simulate client data (in practice, data stays on client devices)

client_data = [

[{'features': np.random.rand(10), 'label': 0} for _ in range(100)]

for _ in range(10)

]

result = fl.federated_training_round(client_data, num_rounds=10)

Homomorphic Encryption: Compute on Encrypted Data

Homomorphic encryption allows computation on encrypted data without decryption:

# Note: This is a conceptual example. In practice, use libraries like PySEAL or TenSEAL

from typing import List

import numpy as np

class HomomorphicML:

def __init__(self):

# Initialize homomorphic encryption context

# In practice, use a library like TenSEAL

self.context = None # Would be initialized with encryption parameters

self.public_key = None

self.secret_key = None

def encrypt_data(self, data: np.ndarray):

# Encrypt data for homomorphic computation

# Returns encrypted representation

# In practice: return context.encrypt(data)

return EncryptedArray(data) # Placeholder

def homomorphic_addition(self, encrypted_a, encrypted_b):

# Add two encrypted values

# In practice: return encrypted_a + encrypted_b

return EncryptedArray(encrypted_a.data + encrypted_b.data)

def homomorphic_multiplication(self, encrypted_a, plaintext_b: float):

# Multiply encrypted value by plaintext

# In practice: return encrypted_a * plaintext_b

return EncryptedArray(encrypted_a.data * plaintext_b)

def encrypted_inference(self, encrypted_input, model_weights: List[float]):

# Perform inference on encrypted data

# Linear model: y = w1*x1 + w2*x2 + ... + b

result = encrypted_input

for i, weight in enumerate(model_weights[:-1]): # Last is bias

if i == 0:

result = self.homomorphic_multiplication(encrypted_input, weight)

else:

term = self.homomorphic_multiplication(encrypted_input, weight)

result = self.homomorphic_addition(result, term)

# Add bias

bias = model_weights[-1]

result = self.homomorphic_addition(result, self.encrypt_data(np.array([bias])))

return result

def decrypt_result(self, encrypted_result) -> float:

# Decrypt final result

# In practice: return context.decrypt(encrypted_result)

return float(encrypted_result.data)

# Placeholder class for demonstration

class EncryptedArray:

def __init__(self, data):

self.data = data

# Usage example

homomorphic_ml = HomomorphicML()

# Encrypt input

input_data = np.array([1.0, 2.0, 3.0])

encrypted_input = homomorphic_ml.encrypt_data(input_data)

# Model weights (linear model: y = 2*x + 1)

model_weights = [2.0, 1.0] # [weight, bias]

# Perform encrypted inference

encrypted_output = homomorphic_ml.encrypted_inference(encrypted_input, model_weights)

# Decrypt result

result = homomorphic_ml.decrypt_result(encrypted_output)

print(f"Encrypted inference result: {result}")

Secure Multi-Party Computation

Multiple parties can compute functions on their combined data without revealing individual inputs:

from typing import List, Dict

import numpy as np

from cryptography.hazmat.primitives.ciphers import Cipher, algorithms, modes

from cryptography.hazmat.backends import default_backend

import secrets

class SecureMultiPartyComputation:

def __init__(self, num_parties: int = 3):

self.num_parties = num_parties

self.shared_secrets = {}

def secret_sharing(self, value: float, num_shares: int = None) -> List[float]:

# Split secret into shares using additive secret sharing

if num_shares is None:

num_shares = self.num_parties

# Generate random shares

shares = [secrets.randbelow(1000000) / 1000000.0 for _ in range(num_shares - 1)]

# Last share makes sum equal to original value

last_share = value - sum(shares)

shares.append(last_share)

return shares

def reconstruct_secret(self, shares: List[float]) -> float:

# Reconstruct secret from shares

return sum(shares)

def secure_sum(self, party_values: List[float]) -> float:

# Compute sum without revealing individual values

# Each party creates shares of their value

all_shares = []

for value in party_values:

shares = self.secret_sharing(value)

all_shares.append(shares)

# Sum corresponding shares

summed_shares = []

for i in range(len(all_shares[0])):

share_sum = sum(shares[i] for shares in all_shares)

summed_shares.append(share_sum)

# Reconstruct result

return self.reconstruct_secret(summed_shares)

def secure_average(self, party_values: List[float]) -> float:

# Compute average without revealing individual values

secure_sum_result = self.secure_sum(party_values)

return secure_sum_result / len(party_values)

# Usage

smpc = SecureMultiPartyComputation(num_parties=3)

# Each party has a private value

party_values = [10.5, 20.3, 15.7]

# Compute sum without revealing individual values

secure_sum = smpc.secure_sum(party_values)

print(f"Secure sum: {secure_sum}")

# Compute average

secure_avg = smpc.secure_average(party_values)

print(f"Secure average: {secure_avg}")

Choosing the Right Privacy Technique

Different techniques suit different use cases:

| Technique | Privacy Guarantee | Performance Impact | Best For |

|---|---|---|---|

| Differential Privacy | Mathematical guarantee | Low (noise addition) | Aggregations, statistics |

| Federated Learning | Data stays local | Medium (communication overhead) | Distributed training |

| Homomorphic Encryption | Strong (encrypted computation) | High (computational overhead) | Cloud computation on sensitive data |

| Secure MPC | Strong (no data sharing) | High (communication overhead) | Multi-party computation |

Best Practices: Lessons from 10+ Implementations

From implementing privacy-preserving AI across multiple projects:

- Use differential privacy for aggregations: Perfect for statistics, histograms, and aggregated queries. Start with epsilon=1.0 and adjust based on accuracy needs.

- Federated learning for distributed data: When data can’t leave devices or organizations. Use secure aggregation to protect model updates.

- Homomorphic encryption for cloud computation: When you need to compute on encrypted data in the cloud. Use for simple operations (additions, multiplications).

- Data minimization: Collect only necessary data. Less data means less privacy risk.

- Encryption at rest and in transit: Encrypt data at rest and in transit. Use TLS for all communications.

- Access controls: Limit who can access sensitive data. Use role-based access control (RBAC).

- Regular audits: Audit privacy protections regularly. Test for vulnerabilities.

- Privacy by design: Build privacy into systems from the start. Don’t add it as an afterthought.

- Compliance: Meet GDPR, CCPA, HIPAA, and other regulations. Understand requirements for your domain.

- Privacy budget management: Track privacy budget usage. Don’t exhaust it early.

- Test privacy guarantees: Test that privacy mechanisms work. Verify differential privacy parameters.

- Monitor for attacks: Monitor for membership inference and model inversion attacks. Set up alerts.

Privacy Budget Management

Differential privacy uses a privacy budget that must be managed carefully:

class PrivacyBudgetManager:

def __init__(self, total_budget: float = 10.0):

self.total_budget = total_budget

self.used_budget = 0.0

self.query_history = []

def can_query(self, epsilon_cost: float) -> bool:

# Check if query can be made

return (self.used_budget + epsilon_cost) <= self.total_budget

def record_query(self, query_type: str, epsilon_cost: float, result: Any):

# Record query and update budget

if not self.can_query(epsilon_cost):

raise ValueError(f"Insufficient privacy budget. Need {epsilon_cost}, have {self.total_budget - self.used_budget}")

self.used_budget += epsilon_cost

self.query_history.append({

'type': query_type,

'cost': epsilon_cost,

'result': result,

'remaining_budget': self.total_budget - self.used_budget

})

def get_remaining_budget(self) -> float:

return self.total_budget - self.used_budget

def get_budget_usage_report(self) -> Dict:

return {

'total_budget': self.total_budget,

'used_budget': self.used_budget,

'remaining_budget': self.get_remaining_budget(),

'usage_percentage': (self.used_budget / self.total_budget) * 100,

'query_count': len(self.query_history)

}

# Usage

budget_manager = PrivacyBudgetManager(total_budget=10.0)

# Check if query is allowed

if budget_manager.can_query(epsilon_cost=1.0):

# Make query with differential privacy

result = dp.private_aggregate(data, sensitivity=1.0)

budget_manager.record_query('aggregate', epsilon_cost=1.0, result=result)

report = budget_manager.get_budget_usage_report()

print(f"Privacy budget usage: {report['usage_percentage']:.1f}%")

Common Mistakes and How to Avoid Them

What I learned the hard way:

- Exhausting privacy budget: Track budget usage. Plan queries carefully. Don’t use all budget early.

- Incorrect sensitivity calculation: Sensitivity determines noise scale. Underestimate sensitivity and privacy is compromised.

- Ignoring composition: Multiple queries compose. Track total epsilon across all queries.

- Weak encryption: Use strong encryption (AES-256). Weak encryption provides false security.

- No access controls: Limit data access. Everyone shouldn’t have access to everything.

- Poor key management: Secure key storage and rotation. Lost keys mean lost data.

- Ignoring regulations: Understand GDPR, CCPA, HIPAA requirements. Non-compliance is costly.

- No testing: Test privacy mechanisms. Verify they work as expected.

- Over-engineering: Start simple. Add complexity only when needed.

- Ignoring performance: Privacy has performance costs. Balance privacy and performance.

Real-World Example: Healthcare AI with Privacy

We built a healthcare AI system that needed to train on patient data while maintaining HIPAA compliance:

- Challenge: Train model on patient data from multiple hospitals without sharing data

- Solution: Implemented federated learning with secure aggregation

- Privacy measures: Data never left hospitals, model updates encrypted, differential privacy on outputs

- Result: Trained accurate model without violating privacy, passed HIPAA audit

Key learnings: Federated learning works well for healthcare, but requires careful coordination and secure aggregation.

🎯 Key Takeaway

Privacy-preserving AI is essential for sensitive data. Use differential privacy for aggregations, federated learning for distributed training, and homomorphic encryption for cloud computation. Minimize data collection, encrypt everything, and implement strict access controls. With proper privacy protections, you can use AI on sensitive data while protecting individual privacy. The cost of privacy is far less than the cost of a breach.

Measuring Privacy Effectiveness

Key metrics to track:

- Privacy budget usage: Track epsilon consumption over time

- Attack success rate: Test for membership inference and model inversion attacks

- Data leakage incidents: Monitor for unauthorized data access

- Compliance status: Track GDPR, CCPA, HIPAA compliance status

- Performance impact: Measure accuracy loss from privacy mechanisms

Bottom Line

Privacy-preserving AI protects sensitive data while enabling AI capabilities. Use differential privacy, federated learning, and encryption appropriately. Minimize data collection and implement strict access controls. With proper privacy protections, you can deploy AI on sensitive data responsibly. Privacy isn’t optional—it’s fundamental to trustworthy AI.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.