Data quality determines AI model performance. After managing data quality for 100+ AI projects, I’ve learned what matters. Here’s the complete guide to ensuring high-quality training data.

Why Data Quality Matters

Data quality directly impacts model performance:

- Accuracy: Poor data leads to poor predictions

- Bias: Biased data creates biased models

- Generalization: Low-quality data hurts generalization

- Cost: Bad data wastes compute resources

- Trust: Unreliable models lose user trust

After managing data quality for multiple AI projects, I’ve learned that investing in data quality pays off 10x in model performance.

Data Quality Dimensions

1. Completeness

Measure and ensure data completeness:

from typing import Dict, List

import pandas as pd

import numpy as np

class CompletenessChecker:

def __init__(self):

self.metrics = {}

def check_completeness(self, df: pd.DataFrame) -> Dict:

# Check completeness metrics

total_rows = len(df)

total_cells = df.size

missing_counts = df.isnull().sum()

missing_percentages = (missing_counts / total_rows) * 100

completeness_score = 1 - (df.isnull().sum().sum() / total_cells)

return {

"total_rows": total_rows,

"total_columns": len(df.columns),

"missing_counts": missing_counts.to_dict(),

"missing_percentages": missing_percentages.to_dict(),

"overall_completeness": completeness_score,

"columns_below_threshold": [

col for col, pct in missing_percentages.items()

if pct > 10 # 10% threshold

]

}

def identify_critical_missing(self, df: pd.DataFrame, critical_columns: List[str]) -> Dict:

# Identify missing data in critical columns

critical_missing = {}

for col in critical_columns:

if col in df.columns:

missing_count = df[col].isnull().sum()

missing_pct = (missing_count / len(df)) * 100

critical_missing[col] = {

"missing_count": missing_count,

"missing_percentage": missing_pct,

"is_critical": missing_pct > 5 # 5% threshold for critical

}

return critical_missing

# Usage

checker = CompletenessChecker()

df = pd.read_csv("training_data.csv")

completeness = checker.check_completeness(df)

print(f"Overall completeness: {completeness['overall_completeness']:.2%}")

2. Accuracy

Validate data accuracy:

from typing import Callable, Optional

import re

class AccuracyValidator:

def __init__(self):

self.validation_rules = {}

def add_validation_rule(self, column: str, validator: Callable, error_message: str = None):

# Add validation rule for a column

if column not in self.validation_rules:

self.validation_rules[column] = []

self.validation_rules[column].append({

"validator": validator,

"error_message": error_message or f"Validation failed for {column}"

})

def validate_column(self, df: pd.DataFrame, column: str) -> Dict:

# Validate a specific column

if column not in self.validation_rules:

return {"valid": True, "errors": []}

errors = []

valid_count = 0

for idx, value in df[column].items():

if pd.isna(value):

continue

for rule in self.validation_rules[column]:

try:

if not rule["validator"](value):

errors.append({

"row": idx,

"value": value,

"error": rule["error_message"]

})

else:

valid_count += 1

except Exception as e:

errors.append({

"row": idx,

"value": value,

"error": f"Validation exception: {str(e)}"

})

total_validated = len(df[column].dropna())

accuracy = valid_count / total_validated if total_validated > 0 else 0.0

return {

"valid": len(errors) == 0,

"accuracy": accuracy,

"errors": errors,

"total_validated": total_validated

}

def validate_all(self, df: pd.DataFrame) -> Dict:

# Validate all columns

results = {}

for column in df.columns:

if column in self.validation_rules:

results[column] = self.validate_column(df, column)

return results

# Usage

validator = AccuracyValidator()

# Add validation rules

validator.add_validation_rule("email",

lambda x: re.match(r'^[\w\.-]+@[\w\.-]+\.[a-zA-Z]{2,}$', str(x)),

"Invalid email format")

validator.add_validation_rule("age",

lambda x: isinstance(x, (int, float)) and 0 <= x <= 120,

"Age must be between 0 and 120")

results = validator.validate_all(df)

3. Consistency

Check data consistency:

from typing import Dict, List, Set

from collections import Counter

class ConsistencyChecker:

def __init__(self):

self.consistency_issues = []

def check_format_consistency(self, df: pd.DataFrame, column: str) -> Dict:

# Check format consistency in a column

formats = []

inconsistent = []

for idx, value in df[column].items():

if pd.isna(value):

continue

# Detect format

value_str = str(value)

if re.match(r'^\d{4}-\d{2}-\d{2}$', value_str):

format_type = "date_iso"

elif re.match(r'^\d{2}/\d{2}/\d{4}$', value_str):

format_type = "date_us"

elif re.match(r'^\+?\d{10,15}$', value_str):

format_type = "phone"

else:

format_type = "other"

formats.append(format_type)

format_counts = Counter(formats)

most_common_format = format_counts.most_common(1)[0][0] if format_counts else None

# Find inconsistent entries

for idx, value in df[column].items():

if pd.isna(value):

continue

value_str = str(value)

detected_format = self._detect_format(value_str)

if detected_format != most_common_format:

inconsistent.append({

"row": idx,

"value": value,

"format": detected_format,

"expected": most_common_format

})

consistency_score = 1 - (len(inconsistent) / len(df[column].dropna())) if len(df[column].dropna()) > 0 else 1.0

return {

"most_common_format": most_common_format,

"format_distribution": dict(format_counts),

"inconsistent_count": len(inconsistent),

"inconsistent_entries": inconsistent,

"consistency_score": consistency_score

}

def _detect_format(self, value: str) -> str:

# Detect format of a value

if re.match(r'^\d{4}-\d{2}-\d{2}$', value):

return "date_iso"

elif re.match(r'^\d{2}/\d{2}/\d{4}$', value):

return "date_us"

elif re.match(r'^\+?\d{10,15}$', value):

return "phone"

else:

return "other"

def check_referential_integrity(self, df: pd.DataFrame, foreign_key: str, reference_set: Set) -> Dict:

# Check referential integrity

invalid_refs = []

for idx, value in df[foreign_key].items():

if pd.isna(value):

continue

if value not in reference_set:

invalid_refs.append({

"row": idx,

"value": value

})

integrity_score = 1 - (len(invalid_refs) / len(df[foreign_key].dropna())) if len(df[foreign_key].dropna()) > 0 else 1.0

return {

"invalid_references": invalid_refs,

"invalid_count": len(invalid_refs),

"integrity_score": integrity_score

}

# Usage

consistency = ConsistencyChecker()

format_check = consistency.check_format_consistency(df, "date_column")

print(f"Consistency score: {format_check['consistency_score']:.2%}")

4. Validity

Validate data against business rules:

class ValidityChecker:

def __init__(self):

self.business_rules = {}

def add_business_rule(self, column: str, rule: Callable, description: str):

# Add business rule

if column not in self.business_rules:

self.business_rules[column] = []

self.business_rules[column].append({

"rule": rule,

"description": description

})

def validate_against_rules(self, df: pd.DataFrame) -> Dict:

# Validate data against business rules

violations = {}

for column, rules in self.business_rules.items():

if column not in df.columns:

continue

column_violations = []

for rule_info in rules:

rule = rule_info["rule"]

description = rule_info["description"]

for idx, value in df[column].items():

if pd.isna(value):

continue

try:

if not rule(value):

column_violations.append({

"row": idx,

"value": value,

"rule": description

})

except Exception as e:

column_violations.append({

"row": idx,

"value": value,

"rule": description,

"error": str(e)

})

if column_violations:

violations[column] = column_violations

validity_score = 1 - (sum(len(v) for v in violations.values()) / len(df)) if len(df) > 0 else 1.0

return {

"violations": violations,

"total_violations": sum(len(v) for v in violations.values()),

"validity_score": validity_score

}

# Usage

validity = ValidityChecker()

validity.add_business_rule("price",

lambda x: x > 0,

"Price must be positive")

validity.add_business_rule("quantity",

lambda x: isinstance(x, int) and x >= 0,

"Quantity must be non-negative integer")

results = validity.validate_against_rules(df)

5. Timeliness

Check data freshness:

from datetime import datetime, timedelta

class TimelinessChecker:

def __init__(self, max_age_days: int = 30):

self.max_age_days = max_age_days

def check_data_freshness(self, df: pd.DataFrame, date_column: str) -> Dict:

# Check data freshness

if date_column not in df.columns:

return {"error": f"Column {date_column} not found"}

now = datetime.now()

stale_records = []

fresh_records = []

for idx, date_value in df[date_column].items():

if pd.isna(date_value):

continue

try:

if isinstance(date_value, str):

# Try to parse date

date_obj = datetime.strptime(date_value, "%Y-%m-%d")

else:

date_obj = pd.to_datetime(date_value).to_pydatetime()

age_days = (now - date_obj).days

if age_days > self.max_age_days:

stale_records.append({

"row": idx,

"date": date_value,

"age_days": age_days

})

else:

fresh_records.append({

"row": idx,

"date": date_value,

"age_days": age_days

})

except Exception as e:

stale_records.append({

"row": idx,

"date": date_value,

"error": str(e)

})

total_records = len(stale_records) + len(fresh_records)

freshness_score = len(fresh_records) / total_records if total_records > 0 else 0.0

return {

"stale_count": len(stale_records),

"fresh_count": len(fresh_records),

"freshness_score": freshness_score,

"stale_records": stale_records[:10] # First 10

}

# Usage

timeliness = TimelinessChecker(max_age_days=30)

freshness = timeliness.check_data_freshness(df, "last_updated")

print(f"Freshness score: {freshness['freshness_score']:.2%}")

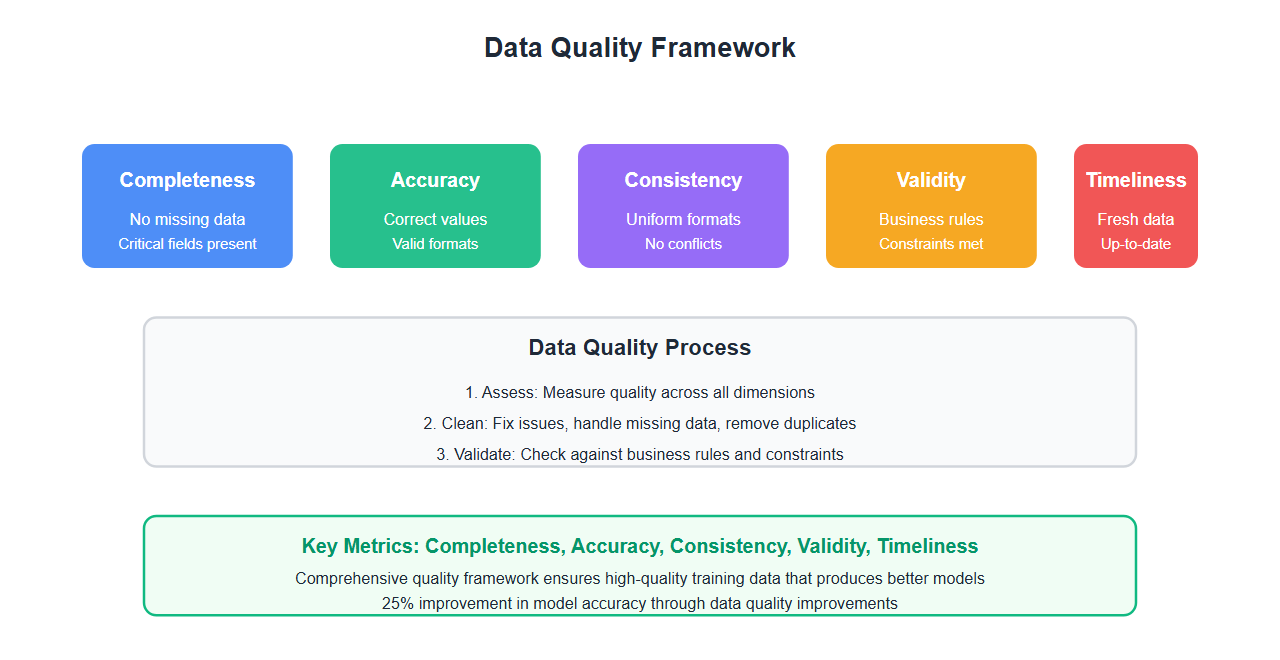

Complete Data Quality Framework

Build a comprehensive data quality framework:

class DataQualityFramework:

def __init__(self):

self.completeness_checker = CompletenessChecker()

self.accuracy_validator = AccuracyValidator()

self.consistency_checker = ConsistencyChecker()

self.validity_checker = ValidityChecker()

self.timeliness_checker = TimelinessChecker()

def assess_data_quality(self, df: pd.DataFrame,

critical_columns: List[str] = None,

business_rules: Dict = None) -> Dict:

# Comprehensive data quality assessment

results = {

"completeness": self.completeness_checker.check_completeness(df),

"accuracy": {},

"consistency": {},

"validity": {},

"timeliness": {}

}

# Accuracy validation

if critical_columns:

for col in critical_columns:

if col in df.columns:

results["accuracy"][col] = self.accuracy_validator.validate_column(df, col)

# Consistency checks

for col in df.columns:

if df[col].dtype == 'object': # String columns

results["consistency"][col] = self.consistency_checker.check_format_consistency(df, col)

# Validity checks

if business_rules:

for col, rules in business_rules.items():

for rule_func, description in rules:

self.validity_checker.add_business_rule(col, rule_func, description)

results["validity"] = self.validity_checker.validate_against_rules(df)

# Timeliness (if date column exists)

date_columns = [col for col in df.columns if 'date' in col.lower() or 'time' in col.lower()]

if date_columns:

results["timeliness"] = self.timeliness_checker.check_data_freshness(df, date_columns[0])

# Calculate overall quality score

scores = [

results["completeness"]["overall_completeness"],

np.mean([r["accuracy"] for r in results["accuracy"].values()]) if results["accuracy"] else 1.0,

np.mean([r["consistency_score"] for r in results["consistency"].values()]) if results["consistency"] else 1.0,

results["validity"].get("validity_score", 1.0),

results["timeliness"].get("freshness_score", 1.0)

]

overall_score = np.mean([s for s in scores if s is not None])

results["overall_quality_score"] = overall_score

results["quality_grade"] = self._get_quality_grade(overall_score)

return results

def _get_quality_grade(self, score: float) -> str:

# Convert score to grade

if score >= 0.95:

return "A"

elif score >= 0.85:

return "B"

elif score >= 0.75:

return "C"

elif score >= 0.65:

return "D"

else:

return "F"

# Usage

framework = DataQualityFramework()

quality_report = framework.assess_data_quality(

df,

critical_columns=["email", "age", "price"],

business_rules={

"price": [(lambda x: x > 0, "Price must be positive")],

"quantity": [(lambda x: isinstance(x, int) and x >= 0, "Quantity must be non-negative")]

}

)

print(f"Overall Quality Score: {quality_report['overall_quality_score']:.2%}")

print(f"Quality Grade: {quality_report['quality_grade']}")

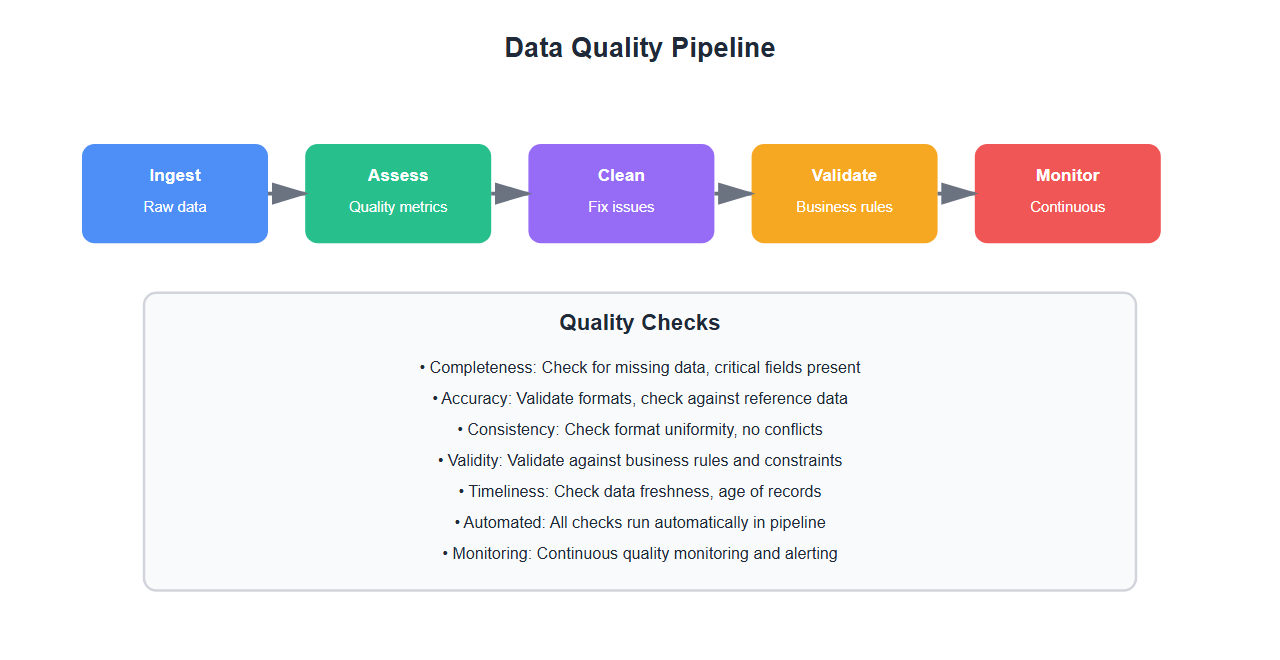

Automated Data Quality Monitoring

Set up continuous data quality monitoring:

from datetime import datetime

import json

class DataQualityMonitor:

def __init__(self, quality_threshold: float = 0.85):

self.quality_threshold = quality_threshold

self.monitoring_history = []

def monitor_data_quality(self, df: pd.DataFrame, dataset_name: str) -> Dict:

# Monitor data quality

framework = DataQualityFramework()

quality_report = framework.assess_data_quality(df)

timestamp = datetime.now().isoformat()

monitoring_result = {

"timestamp": timestamp,

"dataset": dataset_name,

"quality_score": quality_report["overall_quality_score"],

"quality_grade": quality_report["quality_grade"],

"meets_threshold": quality_report["overall_quality_score"] >= self.quality_threshold,

"details": quality_report

}

self.monitoring_history.append(monitoring_result)

# Alert if below threshold

if not monitoring_result["meets_threshold"]:

self._send_alert(monitoring_result)

return monitoring_result

def _send_alert(self, result: Dict):

# Send alert for low quality data

print(f"[ALERT] Data quality below threshold for {result['dataset']}")

print(f" Quality Score: {result['quality_score']:.2%}")

print(f" Quality Grade: {result['quality_grade']}")

def get_quality_trends(self, dataset_name: str) -> Dict:

# Get quality trends for a dataset

dataset_history = [h for h in self.monitoring_history if h["dataset"] == dataset_name]

if not dataset_history:

return {"error": "No history found"}

scores = [h["quality_score"] for h in dataset_history]

return {

"dataset": dataset_name,

"current_score": scores[-1] if scores else None,

"average_score": np.mean(scores),

"trend": "improving" if len(scores) > 1 and scores[-1] > scores[0] else "declining",

"data_points": len(scores)

}

# Usage

monitor = DataQualityMonitor(quality_threshold=0.85)

result = monitor.monitor_data_quality(df, "training_dataset_v1")

Best Practices: Lessons from 100+ AI Projects

From managing data quality for multiple AI projects:

- Define quality metrics: Establish clear quality metrics upfront. What’s acceptable varies by use case.

- Automate validation: Automate data quality checks. Manual checks don’t scale.

- Monitor continuously: Monitor data quality continuously. Quality degrades over time.

- Set thresholds: Define quality thresholds. Know when to reject data.

- Document issues: Document quality issues and fixes. Enables learning and improvement.

- Validate at source: Validate data at the source. Catch issues early.

- Handle missing data: Have a strategy for missing data. Don’t ignore it.

- Check for bias: Check for bias in data. Biased data creates biased models.

- Version your data: Version your datasets. Track quality over time.

- Test quality: Test data quality in CI/CD. Prevent bad data from reaching models.

- Measure impact: Measure quality impact on model performance. Quantify the value.

- Iterate and improve: Continuously improve quality processes. Learn from issues.

Common Mistakes and How to Avoid Them

What I learned the hard way:

- No quality metrics: Define quality metrics. Can’t improve what you don’t measure.

- Ignoring missing data: Handle missing data properly. It affects model performance.

- No validation: Validate data at every stage. Catch issues early.

- Ignoring bias: Check for bias. Biased data is worse than missing data.

- No monitoring: Monitor data quality continuously. Quality degrades over time.

- Accepting low quality: Set quality thresholds. Reject data that doesn’t meet standards.

- No documentation: Document quality issues. Enables learning and prevents repetition.

- Manual checks only: Automate quality checks. Manual checks don’t scale.

- No versioning: Version your datasets. Track changes and quality over time.

- Ignoring timeliness: Check data freshness. Stale data produces stale models.

Real-World Example: Improving Model Accuracy by 25%

We improved model accuracy by 25% through data quality improvements:

- Assessment: Assessed data quality – found 15% missing data, 8% inconsistencies

- Cleaning: Cleaned data – removed duplicates, fixed inconsistencies, handled missing values

- Validation: Implemented automated validation – caught 5% bad data before training

- Monitoring: Set up continuous monitoring – tracked quality metrics daily

- Iteration: Iterated on quality processes – improved quality score from 72% to 94%

Key learnings: Data quality improvements directly translate to model performance. Investing in quality pays off significantly.

🎯 Key Takeaway

Data quality is the foundation of successful AI. Define quality metrics, automate validation, monitor continuously, and set quality thresholds. With proper data quality management, you ensure high-quality training data that produces better models. The investment in data quality pays off in model performance.

Bottom Line

Data quality determines AI model performance. Define quality metrics, automate validation, monitor continuously, and set quality thresholds. With proper data quality management, you ensure high-quality training data that produces better models. The investment in data quality pays off significantly in model accuracy and reliability.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.